
- Compliance Management

- ISO 42001

- 20th Nov 2025

- 1 min read

How to Implement ISO 42001 Using AI Governance Tools: Practical Steps for Responsible AI


In Short

TLDR: 4 Key Takeaways

- ISO 42001 turns AI principles into operations by defining an AI Management System (AIMS) with clear roles, Annex A controls, documentation, human-in-the-loop reviews, and auditability.

- AI governance platforms make ISO 42001 workable at scale, replacing scattered spreadsheets with centralised controls, evidence, reviewer gates, and KPIs aligned to the EU AI Act.

- Implementation follows five repeatable phases (Assessment, Planning, Implementation, Evaluation, and Continuous Improvement), anchored by ownership, evidence, and traceable decisions.

- ISO 42001 accelerates EU AI Act readiness, mapping Annex A themes to Act requirements and providing the operating model needed to prove oversight, risk management, and compliance.

ISO/IEC 42001 gives organisations a practical way to operationalise trustworthy AI by establishing an AI Management System built around governance, risk classification, Annex A controls, documentation, and measurable oversight. This guide shows how AI governance tools transform those requirements into day-to-day workflows: centralising the AI inventory, mapping controls, enforcing human-in-the-loop approvals, and providing evidence and KPIs that stand up to audit. By following the five-phase implementation cycle and using a purpose-built platform, teams can replace manual spreadsheets with defensible, repeatable governance processes. The result is a faster, more reliable path from policy to proof, strengthening both ISO 42001 alignment and EU AI Act compliance readiness.

Introduction

ISO/IEC 42001 is the first international management system standard for AI. It shows you how to run an AI Management System (AIMS) with clear policies, roles, Annex A controls, documentation, metrics, and a review cadence.

This guide explains how to implement ISO 42001 using AI governance tools so you move from policy to proof. We keep it practical: repeatable workflows, defensible audit trails, and measurable results. If you’re comparing ISO 42001 AI governance tools, choosing ISO 42001 compliance software, or looking at AI risk management tools, you’ll see where human-in-the-loop approvals, evidence, and KPIs fit day to day.

If the EU AI Act tells you what to do, ISO 42001 helps you define how to do it. Think of this as the handoff from the Act’s “what” to ISO 42001’s “how,” supported by an AI governance platform that centralizes controls and evidence and keeps reviews traceable.

Understanding ISO 42001 and Its Implementation Goals

What ISO 42001 covers (quick recap)

ISO/IEC 42001 defines the requirements for an AI Management System (AIMS):

- Governance and accountability (owners, decision rights, escalation)

- Risk management (identify systems, classify risk, plan mitigations)

- Ethics and transparency (explainability, user info, documentation)

- Human oversight (clear intervention points, HITL approvals)

- Robustness and security (testing, incidents, resilience)

- Continuous improvement (metrics, audits, corrective actions)

Why it matters for EU AI Act readiness

For high-risk AI, the Act expects strong documentation, oversight, and traceability. ISO 42001 implementation gives you the operating model that supports EU AI Act alignment (clear roles, mapped controls, and evidence you can show) without claiming the standard alone equals legal compliance.

How the standard turns principles into practice

- Inventory and classification: keep a live register of AI systems with purpose, data, owners, and risk class (including EU AI Act category)

- Control mapping once, reuse many times: apply Annex A controls at system and common-control levels to cut duplicate work across frameworks

- Human-in-the-loop where it counts: require reviewer approval for any status-changing step (risk class, releases, exceptions) and keep a full audit trail

- Documentation and evidence: centralize policies, procedures, model cards, data lineage notes, and log exports so the same artifacts serve audits and regulators

- Measurement: track throughput, cycle time, first-pass acceptance, rework percentage, and issue closure to drive continuous improvement

- Auditability by design: link every summary, classification, and decision to its sources and reviewers
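As a rough illustration of the inventory and human-in-the-loop points above, the sketch below models one register entry and a risk-class change that only goes through with a separate reviewer. The field names, the `change_risk_class` helper, and the example system are assumptions made for illustration; they are not taken from ISO/IEC 42001 or from any particular platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AISystem:
    """One row in the AI system register (field names are illustrative)."""
    name: str
    purpose: str
    owner: str
    risk_class: str          # internal rating, e.g. "low" / "medium" / "high"
    eu_ai_act_category: str  # e.g. "high-risk"
    audit_trail: list = field(default_factory=list)


def change_risk_class(system, new_class, proposed_by, reviewer, rationale):
    """Apply a status change only when a second person has approved it."""
    if not reviewer:
        raise PermissionError("status changes need a named reviewer")
    if reviewer == proposed_by:
        raise PermissionError("reviewer must differ from the proposer")
    # Record who changed what, and why, before touching the field itself.
    system.audit_trail.append({
        "field": "risk_class",
        "old": system.risk_class,
        "new": new_class,
        "proposed_by": proposed_by,
        "approved_by": reviewer,
        "rationale": rationale,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    system.risk_class = new_class


# Hypothetical system used only to exercise the sketch.
cv_screener = AISystem(
    name="cv-screener",
    purpose="Rank job applications for recruiter review",
    owner="hr-analytics",
    risk_class="medium",
    eu_ai_act_category="high-risk",
)
change_risk_class(cv_screener, "high", proposed_by="alice", reviewer="bob",
                  rationale="Used in employment decisions")
```

The same pattern would apply to releases and exceptions: the change function refuses to run without an independent reviewer, and the audit entry is written as part of the change rather than as a separate step.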

Implementation goals (use these as acceptance criteria)

- Accountability: named owners, defined decision rights, documented governance cadence

- Transparency: citable sources, explainable rationales, complete change history

- Risk posture: controls matched to risk; timely detection and resolution of issues

- Continuous improvement: KPIs inform corrective actions and measurable gains
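To make the KPI-driven goal concrete, here is a minimal sketch of how the review metrics named earlier (throughput, cycle time, first-pass acceptance, rework percentage) could be computed from review records. The record layout and the metric definitions are assumptions; the article does not define them, so agree your own definitions before reporting on them.

```python
from datetime import date
from statistics import mean

# Hypothetical review records: (submitted, completed, rework_rounds)
reviews = [
    (date(2025, 1, 6), date(2025, 1, 8), 0),
    (date(2025, 1, 7), date(2025, 1, 13), 1),
    (date(2025, 1, 9), date(2025, 1, 10), 0),
    (date(2025, 1, 13), date(2025, 1, 20), 2),
]


def review_kpis(records):
    """Summarise completed reviews into four KPIs (definitions assumed)."""
    if not records:
        raise ValueError("no completed reviews in this period")
    cycle_days = [(done - submitted).days for submitted, done, _ in records]
    # "First pass" here means approved with zero rework rounds.
    first_pass = sum(1 for _, _, rounds in records if rounds == 0)
    total = len(records)
    return {
        "throughput": total,
        "avg_cycle_days": mean(cycle_days),
        "first_pass_acceptance_pct": 100 * first_pass / total,
        "rework_pct": 100 * (total - first_pass) / total,
    }


kpis = review_kpis(reviews)
print(kpis)
```

With these sample records, two of the four reviews pass first time, so first-pass acceptance and rework are both 50%, and the average cycle time is 4 days.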

The Five Phases of ISO 42001 Implementation

Use this ISO 42001 implementation cycle to operationalize your AI Management System (AIMS) and support EU AI Act compliance with an AI governance platform.

| Phase | Objective | Example Tasks | SureCloud Support |
|---|---|---|---|
| 1) Assessment | Build your AI estate and risk view | Create an AI system inventory; map owners and stakeholders; classify risk and EU AI Act category; note current controls and gaps | AI Risk Register with owner assignment and risk taxonomy; inventory fields aligned to Annex A controls |
| 2) Planning | Set governance and targets | Draft/update AI policy; map Annex A controls (common + system level); assign control owners; set human-in-the-loop (HITL) gates and KPIs | Policy & control libraries; cross-framework mapping; workflows/RACI; reviewer gates with audit trails |
| 3) Implementation | Put controls and evidence in place | Apply controls; stand up a single evidence model; enable change control and logging; link artifacts (policies, procedures, model cards, lineage, log exports) | Automated tasking; centralized evidence repository; immutable logs for summaries, citations, and approvals |
| 4) Evaluation | Measure and audit performance | Run internal audits; track throughput, cycle time, first-pass acceptance, rework %, and exceptions; manage issues and actions | KPI dashboards; reviewer-adherence reports; issue/action management; exportable audit packages |
| 5) Continuous Improvement | Keep the AIMS current | Schedule reviews (prioritize high-risk AI); re-assess risk on change; refine controls/procedures; update training and docs | Scheduled reviews; change logs; maturity tracking and trend reports; quick owner reassignment |

Keep this constant across all phases: human-in-the-loop approvals for any status change, centralized documentation and evidence, and end-to-end audit trails—core capabilities of modern AI governance tools.
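One common way to make an audit trail tamper-evident is to chain entries by hash, so that editing an earlier entry breaks every later link. The sketch below shows that idea in a few lines. It is an assumption about how such a log could work, not a description of how any specific governance platform stores its logs, and a production system would also need protected storage and access control.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(prev_hash, event):
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Add an event to the end of the chain."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash,
                "hash": _digest(prev_hash, event)})


def verify(log):
    """Recompute every link; any edited or reordered entry fails the check."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical approval events.
log = []
append_entry(log, {"action": "risk_class_change", "system": "cv-screener",
                   "approved_by": "bob"})
append_entry(log, {"action": "release_approved", "system": "cv-screener",
                   "approved_by": "carol"})
print(verify(log))
```

If someone later edits the first event, for example to change the approver's name, `verify` returns `False` because the stored hash no longer matches the recomputed one.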

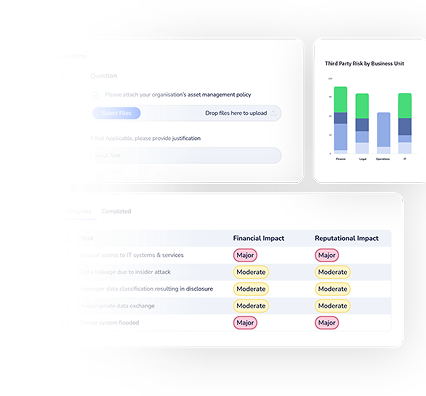

Leveraging AI Governance Tools for ISO 42001 Success

Spreadsheets can’t keep pace with ISO 42001 implementation. As your AI portfolio grows, versions fork, evidence scatters, and approvals slip. An AI governance platform centralizes owners, Annex A controls, evidence, and reviewer gates, so your AIMS is easier to run, audit, and keep aligned to the EU AI Act.

Why manual spreadsheets no longer work

- No single source of truth; versions drift across teams

- Human-in-the-loop approvals are hard to enforce or prove

- Evidence sits in folders and email; audit trails are incomplete

- Cross-framework reuse breaks; mappings don’t stay in sync

- Leaders lack live KPIs (throughput, cycle time, first-pass acceptance, rework %)

Core features of an AI governance platform (what to require)

- Risk classification engine: Tag each AI system with purpose, data sensitivity, owners, internal risk, and EU AI Act category to drive consistent reviews and escalation

- Control mapping to Annex A: Reusable Annex A controls at common-control and system level; track applicability, exceptions, and linked evidence to cut duplicate work

- Documentation & evidence storage: One place for policies, procedures, model cards, data lineage notes, and log exports with citations and immutable audit trails

- Real-time compliance dashboards: See throughput, cycle time, first-pass acceptance, rework percentage, open issues, and reviewer adherence; drill down to artifacts

- Continuous control monitoring (automation): Schedule checks and alerts for priority controls to support your AIMS. Treat monitoring as automation; any status change still needs reviewer approval

Pro tip: Keep AI assistance (drafting, summarization, classification, mapping) separate from automation (scheduled checks/alerts). Status changes should always be human-approved.
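The split between automation and approval can be sketched as a scheduled check that only reports findings and never edits a control's status. The `Control` fields, the control IDs, and the 90-day evidence limit below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Control:
    control_id: str             # hypothetical ID, not an official Annex A reference
    status: str                 # changed only through a human approval step
    last_evidence: date
    max_evidence_age_days: int


def stale_evidence_alerts(controls, today):
    """Scheduled check: list controls with outdated evidence. Never edits status."""
    alerts = []
    for c in controls:
        age = (today - c.last_evidence).days
        if age > c.max_evidence_age_days:
            alerts.append(
                f"{c.control_id}: evidence is {age} days old "
                f"(limit {c.max_evidence_age_days}); reviewer action needed"
            )
    return alerts


controls = [
    Control("CTRL-OVERSIGHT-01", "effective", date(2025, 1, 10), 90),
    Control("CTRL-DATA-02", "effective", date(2025, 9, 1), 90),
]
alerts = stale_evidence_alerts(controls, today=date(2025, 10, 1))
for line in alerts:
    print(line)
```

The check raises an alert for the first control only, and both controls keep their `effective` status until a reviewer decides otherwise.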

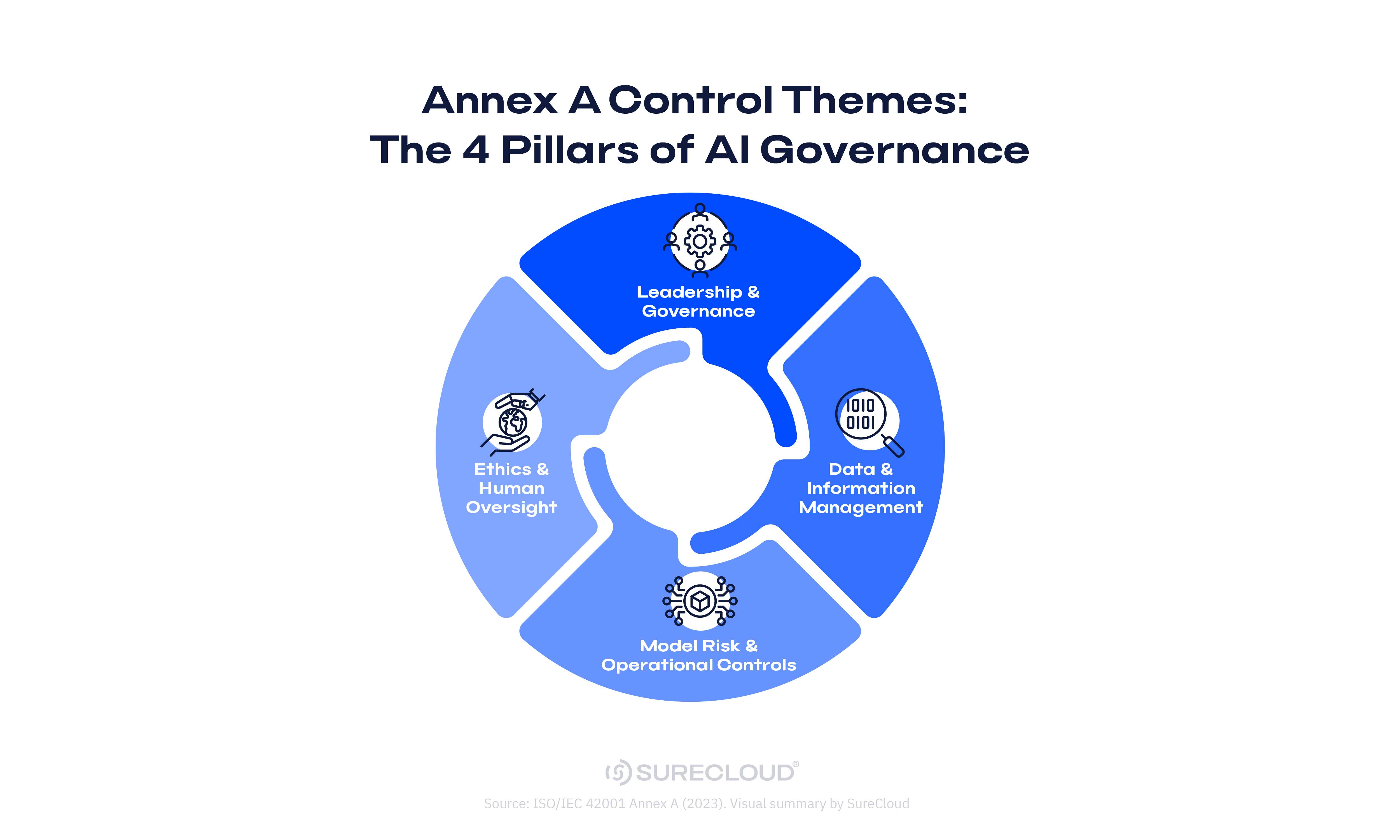

Mapping ISO 42001 Controls to the EU AI Act

ISO/IEC 42001 gives you the operating model: the owners, Annex A controls, evidence, and human-in-the-loop reviews that the Act expects, especially for high-risk AI. It speeds up EU AI Act alignment, but it isn’t a legal shortcut. You still need to confirm obligations for each system and keep proof up to date.

ISO 42001 ↔ EU AI Act Alignment

| ISO 42001 Annex A theme | EU AI Act risk category | EU AI Act article | Control focus (ISO 42001 implementation) | Evidence (attach in your AI governance platform) |
|---|---|---|---|---|
| Governance and accountability | High-risk | Art. 9: Risk management | Charter an AI governance committee; assign system/control owners; define decision rights and escalation paths; document risk processes | Committee charter and minutes; RACI/owner registry; approved policy; risk methodology and records |
| Data and model quality | High-risk | Art. 10: Data governance | Set data-quality criteria; record lineage; document preprocessing; run bias/representativeness checks; approve training/validation sets | Dataset approval log; lineage notes; quality/bias checklists; test reports; model cards |
| Human oversight | High-risk | Art. 14: Human oversight | Place reviewer gates for any status change (risk class, releases, exceptions); define override mechanisms and separation of duties | Reviewer sign-off logs; override procedure; approval timestamps; SoD matrix |
| Transparency and documentation | High-risk | Art. 13: Transparency and provision of information to deployers | Maintain model cards; user-facing information (purpose, limits); keep technical documentation and usage logs | Model cards; user disclosures; technical documentation index; log exports |
| Robustness, accuracy, and security | High-risk | Art. 15: Accuracy, robustness, cybersecurity | Define test plans; validate performance against thresholds; track defects; manage incidents and corrective actions | Test plans and results; defect tracker; incident reports; CAPA records |
| Record-keeping and logging | High-risk | (Supports Arts. 9–15) | Standardize audit trails for prompts, sources, reviewers, and decisions; retain logs per policy | Centralized audit trail; retention policy; change control logs |
| Post-market monitoring and improvement | High-risk | (Ongoing obligations) | Schedule periodic reviews; monitor issues; refine controls and documentation; update training | Review cadence schedule; improvement log; updated procedures and training records |

Use this crosswalk to show EU AI Act alignment during ISO 42001 implementation: classify systems, map applicable Annex A controls (common and system level), attach evidence, verify human-in-the-loop gates, and export the package from your AI governance platform.
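A crosswalk like this can also be held as data, so that missing evidence is found by a query rather than by reading the table. The sketch below encodes three of the rows with shortened evidence names and reports what is still missing for one system. The dictionary layout and the `evidence_gaps` helper are assumptions for illustration, not a platform API.

```python
# Three rows from the crosswalk; evidence names are shortened for illustration.
CROSSWALK = {
    "Governance and accountability": {
        "article": "Art. 9",
        "evidence": {"committee charter", "owner registry", "risk methodology"},
    },
    "Data and model quality": {
        "article": "Art. 10",
        "evidence": {"dataset approval log", "lineage notes", "model card"},
    },
    "Human oversight": {
        "article": "Art. 14",
        "evidence": {"reviewer sign-off log", "override procedure"},
    },
}


def evidence_gaps(attached):
    """Return, per theme, the expected evidence that is not yet attached."""
    gaps = {}
    for theme, row in CROSSWALK.items():
        missing = row["evidence"] - attached
        if missing:
            gaps[theme] = {"article": row["article"],
                           "missing": sorted(missing)}
    return gaps


# Evidence attached so far for a hypothetical system.
attached = {
    "committee charter", "owner registry", "risk methodology",
    "dataset approval log", "model card", "reviewer sign-off log",
}
gaps = evidence_gaps(attached)
for theme, gap in gaps.items():
    print(theme, gap["article"], gap["missing"])
```

For this example the governance row is complete, while the data row is missing lineage notes and the oversight row is missing the override procedure.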

Common Implementation Challenges

Lack of ownership and cross-functional engagement

- Symptoms: orphaned controls, slow reviews, unclear decision rights; policy, risk, security, legal, and product working in silos

- What to do: stand up an AI Governance Committee, publish a system/owner registry, define RACI, and place human-in-the-loop (HITL) gates anywhere status can change (risk class, control status, releases, exceptions)

- Tool-based solution (e.g., SureCloud): owner fields on every AI system and Annex A control; workflow routing to named reviewers; SLA reminders; reviewer-adherence dashboards; end-to-end audit trails

- Expert tip: start with your top ten high-risk AI systems; show owner names and review SLAs on the dashboard to drive accountability and EU AI Act alignment

Overlapping frameworks (ISO 27001, NIST AI RMF, GDPR)

- Symptoms: duplicate work, conflicting templates, scattered evidence, inconsistent wording

- Fix (process): use a common-control library—map once to Annex A controls and reuse across frameworks; record justified exceptions; keep one glossary/taxonomy

- Tool-based solution (e.g., SureCloud): cross-framework mapping and inheritance; tags for EU AI Act category; single evidence record referenced by multiple obligations; “delta” views showing what each framework adds

- Expert tip: lock IDs for controls and evidence types before you import content. Consistent IDs make ISO 42001 implementation measurable and auditable
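The "map once, reuse many times" idea and the delta view can be sketched with stable control IDs and set operations. The control IDs, titles, and framework assignments below are invented for illustration and do not reflect what any of these frameworks actually require.

```python
# Hypothetical common-control library: one control, many framework obligations.
COMMON_CONTROLS = {
    "CC-001": {"title": "Named owner for every system",
               "frameworks": {"ISO 42001", "ISO 27001", "NIST AI RMF"}},
    "CC-002": {"title": "Access logging and retention",
               "frameworks": {"ISO 42001", "ISO 27001", "GDPR"}},
    "CC-003": {"title": "Human review of AI-driven decisions",
               "frameworks": {"ISO 42001", "NIST AI RMF"}},
    "CC-004": {"title": "Records of processing activities",
               "frameworks": {"GDPR"}},
}


def controls_for(framework):
    """IDs of all common controls mapped to one framework."""
    return {cid for cid, ctrl in COMMON_CONTROLS.items()
            if framework in ctrl["frameworks"]}


def delta(new_framework, already_implemented):
    """Controls the new framework adds beyond those already covered."""
    covered = set()
    for framework in already_implemented:
        covered |= controls_for(framework)
    return sorted(controls_for(new_framework) - covered)


print(delta("GDPR", ["ISO 42001"]))
print(delta("ISO 42001", ["ISO 27001"]))
```

Because every mapping hangs off a fixed control ID, adding a framework becomes a question of which IDs are new, which is why locking IDs before import matters.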

Underestimating documentation complexity

- Symptoms: model cards, data lineage, logs, and approvals live in different drives; audits become scavenger hunts

- Fix (process): define a standard evidence model (policy IDs, procedures, model cards, lineage notes, log exports, decisions); require citations for summaries; formalize change control for prompts, releases, and exceptions

- Tool-based solution (e.g., SureCloud): centralized document/evidence store linked to systems and controls; AI-assisted drafting/summarization with sources; immutable audit trails for reviewer sign-off; exportable audit packages

- Expert tip: keep a short “golden” checklist for each control family (what artifact, where it lives, who owns it). Review it monthly as part of your AIMS cadence


From Policy to Proof: Your Next Steps

ISO 42001 implementation works best with tool support. An AI governance platform turns intent into day-to-day operations: shared Annex A controls, central evidence, human-in-the-loop approvals, change control, and audit trails. That gives you measurable outcomes and faster EU AI Act alignment.

Do this next:

- Request a 20-minute demo with SureCloud: See AI-assisted drafting, reviewer workflows, and audit trails in action with our AI governance tools

- Read the EU AI Act 2025 Complete Compliance Guide: Connect the Act’s “what” to ISO 42001’s “how”


FAQs

How long does ISO 42001 implementation take?

Most teams finish assessment and planning in 4–8 weeks, then roll out controls, evidence, and reviews over the next 1–2 quarters. Scope, team size, and existing documentation drive the timeline. An AI governance platform (your ISO 42001 compliance software) speeds up owner assignment, Annex A control mapping, and the assembly of audit-ready trails. Use the ISO 42001 checklist to size the work and track progress.

Do you need certification to comply?

No. You can operate an ISO 42001-aligned AI Management System (AIMS) without formal certification and still demonstrate EU AI Act alignment. Many organizations stabilize their program first, then decide whether to start the ISO 42001 certification process (e.g., pre-assessment, stage 1/2 audits).

What tools help track ISO 42001 controls?

Look for ISO 42001 AI governance tools that provide: an AI inventory and risk classification engine, reusable Annex A mapping, centralized documentation and evidence storage, human-in-the-loop reviewer workflows, change control logging, and real-time compliance dashboards. In short: an AI governance platform plus AI risk management tools to keep decisions, evidence, and metrics in one place.

How does this relate to EU AI Act readiness?

Think “what” vs. “how.” The EU AI Act sets obligations (especially for high-risk AI). ISO 42001 implementation is the “how”: the operating model of owners, controls, evidence, and reviews. Map your Annex A controls to Act requirements, record risk category, keep HITL approvals, and link proof. That’s how to implement ISO 42001 and show credible EU AI Act alignment.

Explore SureCloud’s GRC for AI Governance Resources

“In SureCloud, we’re delighted to have a partner that shares in our values and vision.”

Read more on how Mollie achieved a data-driven approach to risk and compliance with SureCloud.




London Office

1 Sherwood Street, London, W1F 7BL, United Kingdom

US Headquarters

6010 W. Spring Creek Pkwy., Plano, TX 75024, United States of America

© SureCloud 2026. All rights reserved.
