
- Compliance Management

- 21st Nov 2025

- 1 min read

NIST AI RMF vs ISO 42001 – Which Framework Fits Your Organization?


In Short

TLDR: 4 Key Takeaways

- NIST AI RMF gives you flexibility and speed, offering voluntary, principle-based guidance to identify and manage AI risks across the lifecycle without prescribing a strict operating model.

- ISO/IEC 42001 provides a certifiable AI Management System (AIMS) with defined roles, Annex A controls, documentation requirements, and a review cadence that produces audit-ready proof.

- Both frameworks overlap on core responsible AI principles (trust, transparency, data quality, oversight, and continuous improvement), so work done in one can be reused in the other.

- Choosing the right framework depends on your regulatory pressure, maturity, objectives, and customer expectations, and many organisations benefit from using NIST as the methodology and ISO 42001 as the system.

NIST AI RMF and ISO/IEC 42001 address different governance needs but share the same foundation: structured, transparent, and risk-informed AI. NIST provides a flexible, fast-to-adopt approach suited to emerging AI programmes or teams focused on learning and adapting quickly. ISO 42001 goes further by defining a formal management system with prescribed controls, documentation, and audit-ready evidence, which suits regulated sectors and organisations seeking certification.

In practice, the two frameworks complement each other: NIST shapes your risk mindset while ISO 42001 turns it into a repeatable, traceable operating model. Selecting the right option hinges on where you operate, what your customers expect, and how much assurance you need to demonstrate today.

Introduction

AI governance has shifted from “should we?” to “how do we run this well—every day?” Regulators, standards bodies, and enterprises are converging on structured ways to manage AI risk, document decisions, and demonstrate trust. In 2026, with the EU AI Act moving into enforcement and a wider global patchwork of expectations, selecting an AI governance framework gives teams shared language, clear roles, and evidence they can show to customers and auditors.

Two leading options anchor that choice:

- NIST AI Risk Management Framework (NIST AI RMF): US-developed, voluntary, risk-based guidance you tailor to your context.

- ISO/IEC 42001 (ISO 42001): A global, certifiable management system standard for running an AI Management System (AIMS) with defined roles, controls, documentation, and review cadence.

This guide explains what each framework is, why frameworks matter now, and how the two compare in purpose, structure, implementation effort, and proof. Most importantly, it sets up the decision you need to make: Which framework fits your organization’s goals, obligations, and maturity—and when does a combined approach make sense?

What Is the NIST AI Risk Management Framework?

The NIST AI RMF (2023) is a voluntary, principle-driven AI governance framework from the U.S. National Institute of Standards and Technology. It helps organizations identify, analyze, and manage AI risks across the lifecycle without prescribing a single operating model or certification path.

Structure (four core functions):

- Govern: Define policies, roles, decision rights, escalation, and accountability for AI systems.

- Map: Understand context and intended use; build an AI system inventory; document affected users, constraints, and potential impacts.

- Measure: Plan and run evaluations (e.g., robustness, bias, security, reliability); capture metrics, evidence, and reviewer feedback so findings are explainable.

- Manage: Prioritize risks, apply mitigations, record decisions, and schedule reviews so improvements stick.

Emphasis:

Trustworthy AI through systematic risk identification and mitigation; transparency, documentation, human oversight, and learning from results.

Nature:

Voluntary and principle-driven; no certification requirement.

Who it’s for:

Organizations (often U.S.-based or global with U.S. ties) that want flexibility; AI developers, product/risk owners, and program leads who need a common risk language across fast-moving use cases.

Key benefit:

Flexibility and customization. Start quickly, scale depth by risk, and adapt the cadence to your portfolio.

What you produce day-to-day:

A living AI inventory, risk registers, evaluation plans and logs, issue/action tracking, reviewer sign-offs, and post-deployment monitoring notes; lightweight artifacts that are easy to evolve and later reuse if you formalize under ISO 42001.
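To make "lightweight artifacts" concrete, here is a minimal sketch of an inventory entry and a risk register item as plain Python dataclasses. NIST AI RMF does not prescribe a schema, so the field names, the 1 to 5 scoring scale, the threshold of 12, and the example system are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class Risk:
    # Hypothetical fields; adapt them to your own risk method.
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    mitigation: str = ""
    owner: str = ""

    @property
    def score(self) -> int:
        # Likelihood x impact is one common convention, not a NIST requirement.
        return self.likelihood * self.impact


@dataclass
class AISystem:
    name: str
    owner: str
    intended_use: str
    risks: list[Risk] = field(default_factory=list)
    next_review: Optional[date] = None

    def top_risks(self, threshold: int = 12) -> list[Risk]:
        """Return risks at or above the threshold, highest score first."""
        flagged = [r for r in self.risks if r.score >= threshold]
        return sorted(flagged, key=lambda r: r.score, reverse=True)


# Usage with a made-up system
chatbot = AISystem(
    name="support-chatbot",
    owner="cx-team",
    intended_use="Answer billing questions",
)
chatbot.risks.append(Risk("Hallucinated refund policy", likelihood=3, impact=4))
chatbot.risks.append(Risk("Slow response under load", likelihood=2, impact=2))
print([r.description for r in chatbot.top_risks()])
# ['Hallucinated refund policy']
```

Because the records are plain data, they can later be exported into whatever evidence model a formal AIMS requires.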

What Is ISO/IEC 42001?

Published in December 2023 by ISO/IEC JTC 1/SC 42, ISO/IEC 42001 defines how to run an AI Management System (AIMS)—the organizational operating model for governing AI—similar to how ISO 27001 governs information security. It turns principles into daily practice with clear roles, policies, controls, documentation, and a review cadence you can audit.

Structure (Plan–Do–Check–Act):

- Governance & leadership (Plan): set AIMS scope, leadership commitment, roles, decision rights, objectives, and risk criteria.

- Operate controls (Do): implement documented policies and procedures across data, models, lifecycle stages, suppliers, and change management.

- Assure performance (Check): measure outcomes, run internal audits, review incidents and nonconformities, and track KPIs.

- Improve (Act): corrective actions, management review, and continual improvement.

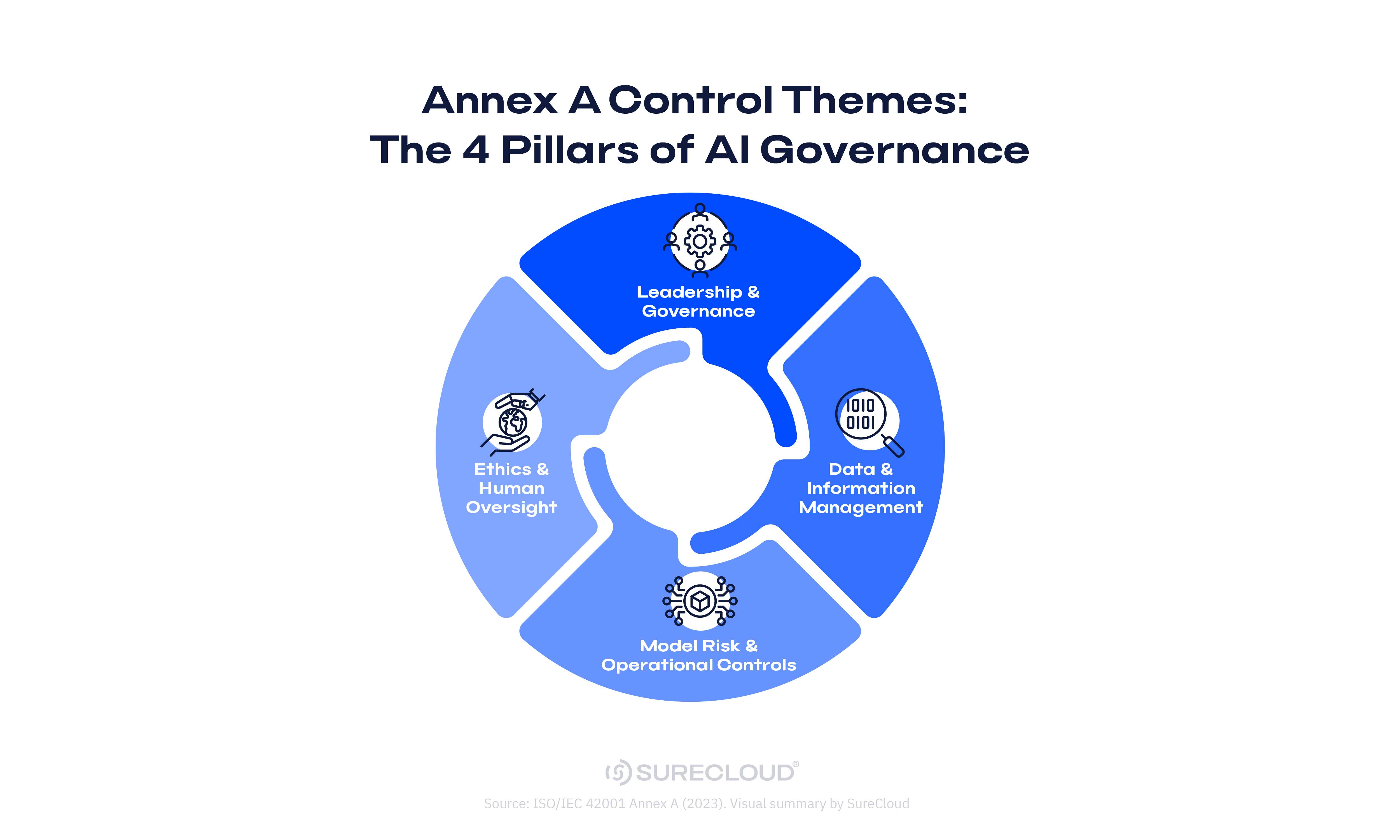

Control framework:

An Annex A control set you tailor to scope and risk. Typical artifacts: policies, procedures, model cards, data lineage notes, test plans/results, incident records, approvals for status changes, and audit logs.

Certification:

Certifiable via independent audit (Stage 1 readiness, Stage 2 effectiveness, then surveillance/recertification). Certification provides external assurance to customers and regulators.

Best suited for:

Regulated industries or any organization that needs third-party validation, structured documentation, and audit-ready evidence at scale.

NIST vs ISO 42001 — Head-to-Head Comparison

| Dimension | NIST AI RMF | ISO/IEC 42001 |
| --- | --- | --- |
| Nature & purpose | Voluntary guidance to identify, assess, and manage AI risk. | Certifiable management system (AIMS) to govern AI across the organization. |
| Scope & audience | US-developed, principle-based; widely adopted globally by product, risk, and engineering teams. | Global standard; strong fit for enterprises, regulated sectors, and audit-mature programs. |
| Structure | 4 functions: Govern, Map, Measure, Manage (adapt by context and risk). | PDCA cycle with documented processes and Annex A controls. |
| Certification | None (self-assessment and continuous improvement). | Yes, independent audit and certificate. |
| Governance model | Flexible and decentralized; roles defined as needed. | Formal roles, leadership commitment, policies, records, review cadence. |
| Controls & documentation | Suggested practices/profiles; customize depth by risk. | Prescriptive control set (Annex A); required evidence and traceability. |
| Regulatory fit | Aligns to many policies; can support EU AI Act needs when tailored. | Supports EU AI Act readiness via structure, roles, controls, and documentation (not a legal substitute). |
| Implementation effort | Lower overhead; faster start; evolve as portfolio grows. | Higher ongoing effort; internal audits, metrics, and continual improvement. |
| Outcome | Risk-informed culture and shared language for trustworthy AI. | Audit-ready proof and certified governance assurance. |
| Best for | Earlier-stage or innovation-led programs needing flexibility. | Organizations needing external assurance or customer/regulatory validation. |

Where They Overlap

Both frameworks reflect the same responsible AI standards: trust, accountability, transparency, data quality, bias mitigation, and human oversight. Implemented well, either path gives you a repeatable way to govern risk, document decisions, and show proof.

Shared principles

- Trust and accountability

- Transparency and clear documentation

- Data quality and bias mitigation

- Human oversight with defined intervention points

Both encourage

- Documented risk processes across the full AI lifecycle

- Lifecycle governance with named owners, decision rights, and review gates

- Continuous monitoring, measurement, and periodic review (not one-and-done)

A practical takeaway: Work you do under one framework often transfers to the other. For example, model evaluations and reviewer notes logged under NIST can serve as evidence inside an ISO 42001 AIMS, and ISO’s role definitions can formalize the accountability NIST calls for.

How They Differ in Implementation

| Element | NIST AI RMF Approach | ISO/IEC 42001 Approach |
| --- | --- | --- |
| Intake & inventory | Stand up a lightweight intake and AI system inventory tailored to context and risk. | Maintain a defined AIMS scope and formal inventory with owners, purpose, risk attributes, and status. |
| Risk management | Context-driven identification and assessment; choose methods per use case. | Formal risk register with owners, treatments, verification, and residual-risk tracking. |
| Controls | Suggested practices and profiles; select what fits and calibrate depth by risk. | Prescriptive Annex A control set; mark applicability, assign owners, implement, and verify. |
| Documentation & evidence | Recommended for transparency and learning; flexible artifact set. | Required artifacts for auditability (policies, procedures, model cards, data lineage, test logs, approvals). |
| Human oversight & approvals | Emphasizes accountability; define reviewer steps where they matter most. | Explicit reviewer gates (human-in-the-loop) for status changes; separation of duties and sign-off trails. |
| Testing & metrics | Choose and iterate evaluations (robustness, bias, security, reliability); track results. | Define KPIs and run internal audits (e.g., throughput, cycle time, first-pass acceptance, rework percentage). |
| Audit & review cadence | Self-assessment and continuous improvement at a cadence you set. | Management reviews, internal audits, and external audits for certification and surveillance. |
| Change control & traceability | Capture decisions and updates within the Govern/Manage cycle. | Formal change control with immutable logs linking decisions to sources, reviewers, and evidence. |
| Suppliers & third parties | Incorporate vendor risk into mapping and measurement as needed. | Define third-party requirements and evidence explicitly within the AIMS (policies, controls, due diligence records). |
| Roles & accountability | Flexible, decentralized ownership; adapt roles by use case. | Formal leadership commitment, named owners, RACI, and documented decision rights. |
| Tooling | Any workflow that supports Govern–Map–Measure–Manage; lighter overhead. | Platform support to run an AIMS: control mapping, evidence model, reviewer workflows, and exportable audit packages. |
| Proof / assurance | Show artifacts, metrics, and improvement over time. | Show audit-ready proof and (optionally) a third-party certificate. |

What this means in practice: NIST gives you speed and flexibility to pilot governance on a few priority systems and evolve quickly. ISO 42001 asks for the same kinds of activities but makes them systematic and auditable from day one—useful when customers or regulators expect formal assurance.

Choosing the Right AI Compliance Framework

Use this decision checklist to match your context to the right AI compliance framework—fast.

Geography & regulation

- Primarily U.S. footprint, lighter formal assurance pressure → NIST AI RMF (voluntary, risk-based).

- EU/global exposure, buyer or regulator audits → ISO/IEC 42001 (certifiable AIMS and audit-ready proof).

Maturity

- Early-stage or innovation-led program → NIST AI RMF (start quickly; scale depth by risk).

- Process-mature, compliance-driven enterprise → ISO 42001 (roles, Annex A controls, documented cadence).

Objectives

- Build trust and a shared risk language across teams → NIST AI RMF.

- Demonstrate external assurance/certification to customers and regulators → ISO 42001.

Practical guidance

- If your near-term need is speed and internal alignment, begin with NIST AI RMF on a small set of high-impact systems.

- If procurement or regulators already ask for certificates or mapped controls, prioritize ISO 42001 with a defined scope, then widen annually.

- If you’re on the fence, pilot NIST on the same systems you plan to certify, so artifacts transfer cleanly into the AIMS.
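The checklist above can be sketched as a small function. This is an illustrative simplification, not a compliance determination: the `OrgProfile` fields, the signal-counting rule, and the threshold of three are assumptions chosen to mirror the bullets, and real decisions will weigh these factors with more nuance.

```python
from dataclasses import dataclass


@dataclass
class OrgProfile:
    # Hypothetical yes/no simplifications of the checklist dimensions.
    eu_or_global_exposure: bool
    buyers_or_regulators_audit: bool
    process_mature: bool
    needs_certification: bool


def recommend_framework(p: OrgProfile) -> str:
    """Return a starting-point suggestion based on the checklist signals."""
    iso_signals = sum([
        p.eu_or_global_exposure,
        p.buyers_or_regulators_audit,
        p.process_mature,
        p.needs_certification,
    ])
    # Assumption: certification demand is decisive, since only
    # ISO/IEC 42001 offers a certificate.
    if p.needs_certification or iso_signals >= 3:
        return "ISO/IEC 42001"
    if iso_signals == 0:
        return "NIST AI RMF"
    # Mixed signals: pilot NIST on the systems you expect to certify later.
    return "NIST AI RMF pilot, scoped for later ISO/IEC 42001"


startup = OrgProfile(False, False, False, False)
bank = OrgProfile(True, True, True, True)
scaleup = OrgProfile(True, False, False, False)

print(recommend_framework(startup))  # NIST AI RMF
print(recommend_framework(bank))     # ISO/IEC 42001
print(recommend_framework(scaleup))  # NIST AI RMF pilot, scoped for later ISO/IEC 42001
```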


Can You Use Both Together?

Yes. Many organizations pair NIST AI RMF (the risk methodology) with ISO/IEC 42001 (the management system). Think: NIST = the mindset; ISO 42001 = the system, so language, controls, and proof stay consistent.

How they combine in practice

- Govern → feeds ISO 42001 governance: policies, roles, decision rights, review cadence.

- Map → becomes your AIMS inventory and context: systems, intended use, stakeholders, applicable Annex A controls.

- Measure → supplies tests/metrics (robustness, bias, security, reliability) that serve as ISO 42001 evidence.

- Manage → drives corrective actions, change control, and continual improvement inside the AIMS.
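One way to picture this crosswalk is as a lookup table that files each artifact under the part of the AIMS it feeds. The sketch below takes the four pairings from the bullets above; the dictionary layout, the area labels, the function name, and the sample artifact names are illustrative assumptions rather than wording from either framework.

```python
from collections import defaultdict

# Pairings follow the bullets above; the labels are paraphrased for brevity.
NIST_TO_AIMS_AREA = {
    "Govern": "Governance (policies, roles, decision rights, review cadence)",
    "Map": "Inventory and context (systems, intended use, stakeholders)",
    "Measure": "Evidence (tests and metrics)",
    "Manage": "Improvement (corrective actions, change control)",
}


def build_audit_index(artifacts: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group (artifact_name, nist_function) pairs under the AIMS area they feed."""
    index: dict[str, list[str]] = defaultdict(list)
    for name, function in artifacts:
        if function not in NIST_TO_AIMS_AREA:
            raise ValueError(f"Unknown NIST function: {function!r}")
        index[NIST_TO_AIMS_AREA[function]].append(name)
    return dict(index)


# Hypothetical artifact names, tagged once with the NIST function that produced them.
index = build_audit_index([
    ("ai-policy-v2.pdf", "Govern"),
    ("chatbot-bias-eval.csv", "Measure"),
    ("chatbot-robustness-eval.csv", "Measure"),
    ("incident-0042-corrective-action.md", "Manage"),
])
for area, names in index.items():
    print(area, "->", names)
```

Tagging each artifact once and deriving the second view from the table is what keeps the two frameworks from turning into two filing systems.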

Why this helps

- Reduces duplicate work: one taxonomy for controls and evidence, one approval trail, one audit package.

- Keeps flexibility where you need it (NIST) while adding assurance where it’s required (ISO 42001).

How SureCloud Supports Both

SureCloud’s GRC for AI Governance works with leading AI governance tools and practices, so you can run NIST AI RMF, ISO/IEC 42001, or both without duplicating effort. It maps NIST’s Govern–Map–Measure–Manage activities to ISO 42001 roles, Annex A controls and records; keeps a single AI system inventory with owners and risk attributes; links policies, procedures, model cards, data lineage notes, test logs, and sign-offs to the right control; and enforces human-in-the-loop approvals and change control with exportable audit trails.

Map NIST to ISO 42001 once—reuse everywhere

- Align NIST activities to ISO 42001 roles, controls, and records so work is done once and surfaced in both views.

- Maintain one AI system inventory with owners, intended use, and risk attributes; tag items to NIST functions and Annex A controls simultaneously.

- Keep one taxonomy for artifacts (policies, procedures, model cards, data lineage notes, test logs, incident records) so evidence travels cleanly between frameworks.

Automate evidence capture and keep it audit-ready

- Centralize documents and link them to specific controls or NIST functions; preserve citations and reviewer sign-offs.

- Record change control (who changed what, when, and why) with immutable audit trails that export into audit packages.

- Support human-in-the-loop approvals for any status change (risk class, control status, exceptions, releases).
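For readers curious what a tamper-evident change log can look like, here is a generic hash-chain sketch. It is not a description of how SureCloud or any other product implements audit trails; the field names and the rule that the approver must differ from the author are assumptions used to illustrate change control with separation of duties.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(entry: dict) -> str:
    """Hash an entry deterministically (keys sorted before serializing)."""
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_change(log: list[dict], who: str, what: str, why: str, approved_by: str) -> dict:
    """Append a change record that is chained to the previous record's hash."""
    # Assumed separation-of-duties rule: a named reviewer other than the author.
    if not approved_by or approved_by == who:
        raise ValueError("a change needs an approver other than its author")
    entry = {
        "who": who,
        "what": what,
        "why": why,
        "approved_by": approved_by,
        "when": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else "",
    }
    entry["hash"] = _digest(entry)  # computed before the hash key is added
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Return True if no record was altered, removed, or reordered."""
    prev = ""
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


log: list[dict] = []
append_change(log, "alice", "risk class: medium -> high", "new bias finding", "bob")
append_change(log, "bob", "control status: implemented", "evidence attached", "carol")
print(verify(log))  # True

log[0]["what"] = "risk class: medium -> low"  # simulate tampering
print(verify(log))  # False
```

Editing any earlier record changes its hash and breaks the link to every later record, which is what makes the trail tamper-evident.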

Monitor risks, ethics metrics, and program progress

- Dashboards show inventory coverage, open risks, reviewer adherence, and program KPIs (throughput, cycle time, first-pass acceptance, rework percentage).

- Drill from KPI to artifact to reviewer trail in a click, so management reviews and internal audits are fast and traceable.
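The article names these KPIs without defining them, so the sketch below picks one plausible set of definitions: throughput as completed reviews in the period, cycle time as mean days from submission to approval, first-pass acceptance as the share approved with no rework, and rework percentage as the share sent back at least once. The `Review` record and all names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Review:
    submitted: date
    approved: date
    rework_rounds: int  # times a reviewer sent the item back


def program_kpis(reviews: list[Review]) -> dict[str, float]:
    """Compute the four KPIs under the assumed definitions stated above."""
    if not reviews:
        raise ValueError("no completed reviews in this period")
    total = len(reviews)
    reworked = sum(1 for r in reviews if r.rework_rounds > 0)
    return {
        "throughput": float(total),
        "cycle_time_days": float(mean((r.approved - r.submitted).days for r in reviews)),
        "first_pass_acceptance_pct": 100.0 * (total - reworked) / total,
        "rework_pct": 100.0 * reworked / total,
    }


# Made-up review records for one reporting period
period = [
    Review(date(2025, 11, 3), date(2025, 11, 5), 0),
    Review(date(2025, 11, 3), date(2025, 11, 7), 1),
    Review(date(2025, 11, 10), date(2025, 11, 12), 0),
    Review(date(2025, 11, 10), date(2025, 11, 14), 0),
]
print(program_kpis(period))
```

Under these definitions first-pass acceptance and rework percentage always sum to 100, so a program may prefer to track rework rounds per item instead.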


Choose Your Path, Then Operationalize Proof

“In SureCloud, we’re delighted to have a partner that shares in our values and vision.”

Read more on how Mollie achieved a data-driven approach to risk and compliance with SureCloud.


